I’ve decided to move my blog to a new location so that I can do more with it and am no longer restricted by the free version of WordPress. If you want to keep following my blog, or you came here some other way, please check out its new location at http://www.ramsmusings.com

Raygun.io vs. Airbrake.io – .NET Error Logging Comparison

Today I’m going to talk about two SaaS-based error logging solutions for .NET: Raygun.io and Airbrake.io. Raygun’s community manager recently contacted me and asked me to give their solution a try. I was already using Airbrake but had not been very impressed with the level of detail it gave us. While Airbrake worked really well for our Ruby projects, it didn’t report the same level of detail for our .NET projects. I decided to give Raygun a try and I’m super happy that I did. Let me cover some of the reasons why I prefer Raygun over Airbrake.

Raygun is super easy to get started with. You can download their library from NuGet by searching for the package name, Mindscape.Raygun4Net. Once Raygun is installed in your project, you are ready to go. Raygun even generates the code you need to create a client for their library, complete with your project-specific API key. Raygun uses that key to make sure you are the only one who can view your errors and that your errors never show up in another user’s account. Using a key is standard practice for third-party SaaS solutions to keep information segmented, but most solutions do not generate starter code for you. It is only one line of code, but it is a nice little feature that many developers take for granted. After the client is initialized, it takes one more line of code, at minimum, to start sending errors to Raygun. Here is all the code necessary to get started.

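```csharp
using System;
using Mindscape.Raygun4Net;

public void SendErrorToThirdPartyLogger(Exception ex)
{
    // replace "raygun_api_key" with the key Raygun generated for your application
    var client = new RaygunClient("raygun_api_key");
    client.Send(ex);
}
```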

It doesn’t get much easier than that to start sending your exceptions to a third-party service. All the getting-started instructions are listed in your account on Raygun’s website.

Airbrake is very similar to Raygun when it comes to installation, but there are a few differences. You can download the Airbrake library from NuGet, but don’t search for Airbrake: the package you need is called Sharpbrake. Sharpbrake also depends on a package named Common.Logging, which NuGet installs automatically. Once Sharpbrake is installed, you have one more step: configuring the API key and a few other settings in your application’s .config file. This is a step Raygun didn’t need. Once your .config file has the parameters below, you can start using Sharpbrake in your application. Here is an example of the settings you need in your .config file, followed by sample code that actually logs errors to Airbrake.

Config File Settings Example for Airbrake

```xml
<appSettings>
  <add key="Airbrake.ApiKey" value="1234567890abcdefg" />
  <add key="Airbrake.Environment" value="Whatever" />
  <add key="Airbrake.ServerUri" value="Airbrake server url (optional)" />
</appSettings>
```

Simple Sharpbrake Implementation Example

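```csharp
using System;
using SharpBrake;

public static void SendErrorToThirdPartyLogger(Exception ex)
{
    // extension method provided by Sharpbrake; it reads its settings from the .config file
    ex.SendToAirbrake();
}
```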

Although Sharpbrake also needs only one line of code, the extra step of configuring your application’s config file is what separates the Airbrake setup from the Raygun setup, and this is where Raygun’s simplicity pays off. Here’s one example why. I implemented Airbrake in a Windows Service application. To get errors logged to Airbrake, I had to put the Airbrake config settings in the Windows service project rather than in the config file of the project containing the method that logs errors to Airbrake. That in turn forced me to add the Sharpbrake and Common.Logging libraries to the Windows service project, since that was where the config settings lived. So the libraries had to be referenced in two projects: the Windows service project and the project with the actual logging code. I had to do this in about five different solutions, because I was running five different Windows services. Keeping track of references and config file settings is not easy and requires a good bit of automation to make sure nothing breaks when moving between environments. To change the environment setting per environment, I had to make sure our build process updated that value for each environment. If you don’t have an automated build process, this can be a tedious task that leads to mistakes.

As far as pricing goes, Raygun and Airbrake are pretty similar and both offer options for a range of budgets. However, one thing Airbrake has that Raygun doesn’t is a free tier. You don’t get a whole lot with Airbrake’s free tier, but something is better than nothing. Raygun’s smallest option is the Micro plan, which is $9 a month and includes one monitored application, unlimited users, 14 days of data retention and up to 5k stored errors per month. I don’t know about you, but if my application throws 5k errors in a month, I have serious issues and probably shouldn’t be writing code. Now say you are a small business and really want to monitor all three environments for your application: development, staging and production. Raygun’s next plan up is $29 a month and gives you five applications, but you only need three for your one application, and $29 a month may feel like too much to spend on tracking errors. Here is a little tip to stay on the Micro tier: Raygun lets you add tags to the errors you send. Here is an example of what I did while testing Raygun.

```csharp
using System;
using System.Collections.Generic;
using System.Configuration;
using Mindscape.Raygun4Net;

public void SendErrorToThirdPartyLogger(Exception ex)
{
    // set a default environment
    var environment = "development";

    // grab the environment setting from our app.config file
    if (ConfigurationManager.AppSettings["env"] != null)
        environment = ConfigurationManager.AppSettings["env"].Trim();

    // create a new tag element that contains the current environment
    var tags = new List<string> { environment };

    // initialize the raygun client
    var client = new RaygunClient("raygun_api_key");

    // send the error to raygun, tagged with the environment
    client.Send(ex, tags);
}
```

I used our app.config file to set the environment during our build process so everything was automated and I didn’t have to worry about manually changing the environment setting. Now whenever an error comes into Raygun I can check to see what environment the issue occurred in and determine the priority of the error.

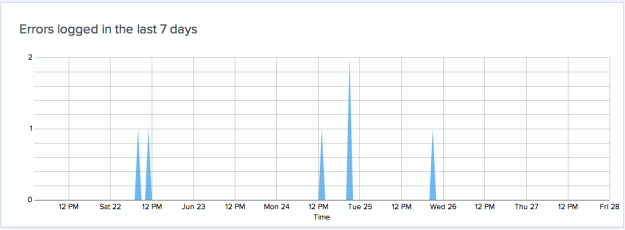

Moving on to the Raygun portal: in my opinion, this is where the big difference between Raygun and Airbrake comes into play. Raygun provides a really nice graph showing how many errors occurred over the past seven days. It gives a quick visual that makes it easy to see whether a new release is causing a lot of errors. Airbrake doesn’t have any charts or graphs like this. Here is a screenshot of the graph.

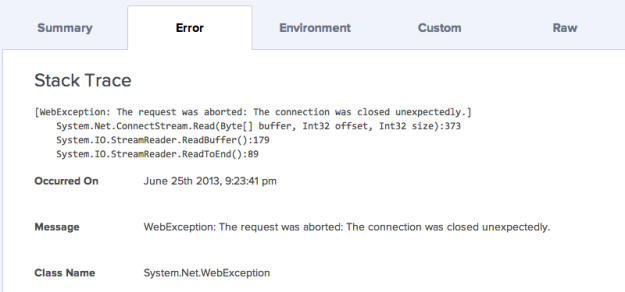

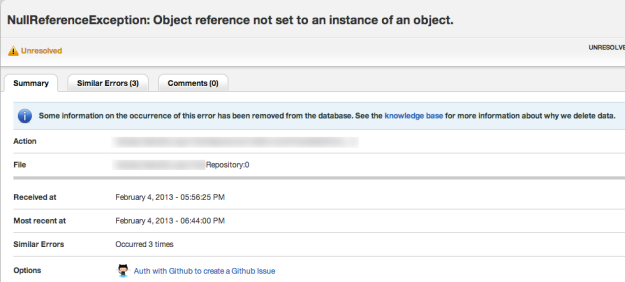

Finally, I’d like to discuss the major difference between Raygun and Airbrake: the level of detail you get. Raygun provides a full stack trace of the error, the time of the error, the class name for the error and even the server environment specs. With Airbrake you only get the time of the error and very basic error information, with no full stack trace and no environment specs. Not having the full stack trace really hinders your ability to troubleshoot issues in a timely manner, and in a production environment fixing issues quickly is key. Here are some sample screenshots of actual errors from both solutions. Note that I cut some details out of the Raygun screenshot because they were specific to my application and I didn’t want to give them out.

Raygun.io error detail

Airbrake.io error detail

As you can see from the screenshots, there really is no comparison in the level of detail you get from Raygun and Airbrake. Raygun is far superior.

I’ve been using Raygun for just over two weeks now and I’ve been really impressed. Installation was super easy; it actually took me longer to remove all the Sharpbrake setup and NuGet packages than it took me to install and start using Raygun. Pricing for both solutions is reasonable, but I feel you get more for your money with Raygun than with Airbrake. My only complaint is that both Raygun and Airbrake need to do a better job of making their sites work on mobile devices. I had a hard time viewing error information on my iPhone, and if I’m out and about when errors come in, I want to know exactly what the problem is. You might be asking how you would even know errors occurred when you are away from your computer. Both solutions offer email notifications when errors come in, so you can stay on top of things. That is a great feature and, in my opinion, probably worth the cost of either solution on its own.

Finally, note that this comparison is based only on the .NET implementations of both solutions. My colleague Scott Bradley will soon be writing a blog post comparing the Ruby implementations of Raygun and Airbrake. Here is a link to his blog.

Well, that’s it for today. Hopefully this gave you an overview of Raygun.io and Airbrake.io and showed why I would choose Raygun.io over Airbrake.io for .NET error logging.

Auto-Incrementing Keys with NoSQL Solutions

Today I’d like to follow up on one of my previous posts on Couchbase Keys and talk a little bit about creating auto-incrementing keys in NoSQL solutions. I’ll focus on Couchbase as that is one of the NoSQL solutions I currently use but the same principles can also apply with other NoSQL solutions.

If you are coming from a SQL environment, you are probably pretty familiar with auto-incrementing primary keys, but a NoSQL database has no such thing as a primary key or an auto-incrementing key, so you have to make your own. There are other kinds of keys you can create in a NoSQL database, but what happens when you can’t come up with a good key? Let’s use a scenario almost every website has: user accounts. You might think you can use the username, the email address, a combination of the two, a phone number, the user’s first and last name or some other account property, but there are a few problems with going this route. What happens if the user changes their username, updates their email address, gets married and changes their last name, or changes their phone number? You would then have to update your document key, which could lead to cascading changes and other issues. And what happens if you miss a document that needed to be updated with the new key? You could end up with a real mess on your hands. This is where an auto-incrementing key comes in handy.

Another major benefit of an auto-incrementing key is that it makes it really easy to enumerate all of your documents without needing a view to retrieve them. You can read more about why using a view is not a great idea in my previous post on Couchbase keys. I’ll show you an example of enumerating all your documents later in this post.

The implementation of an auto-incrementing key uses what’s called the lookup pattern. The first thing you want to do is create a master counter document. This document holds the number of documents created so far and is used to get the next id value for your key. Give the master counter document a unique key; let’s go with something like user_account::count. This makes the document easy to look up and is self-documenting about what it is used for. Couchbase has a client operation called Increment that increases the value this document holds. We only need to store an integer in the document, since Increment takes care of increasing that value each time it is called. Below are a couple of code examples, in both C# and Ruby, showing how to create the master counter document.

C#

```csharp
using Couchbase;
using Enyim.Caching.Memcached;

// initialize the counter document. this only needs to be done once.
var client = new CouchbaseClient();
client.Store(StoreMode.Add, "user_account::count", 0);
```

Ruby

```ruby
# initialize the counter document. this only needs to be done once.
client = Couchbase.new
client.add("user_account::count", 0)
```

Once the master counter document is created you can increment the number in the document using the following line of code:

C#

```csharp
// arguments: key, value to store if the key is missing, amount to add
var current_counter_value = client.Increment("user_account::count", 1, 1);
```

Ruby

```ruby
current_counter_value = client.incr("user_account::count")
```

As you can see, incrementing the value in the master counter document is very simple. You also don’t have to start the counter at 0; you can use whatever number you want. If you want to start at 1000, just pass in 1000 instead of 0. The return value is the new counter value, so if the value was 5 before calling Increment, the return value is 6. We then use that value to build the user account document key: continuing with 6, we create the new user account document with the key “user_account::6”. The next document key will be “user_account::7”, and so on. Below is a complete example of creating the master counter document, incrementing the value and then creating a new user account document.

C#

```csharp
using Couchbase;
using Enyim.Caching.Memcached;

// create the master counter document
var client = new CouchbaseClient();
client.Store(StoreMode.Add, "user_account::count", 0);

// increment the counter (This isn't actually necessary since we just initialized the document but
// for illustrative purposes and completeness I wanted to show all the steps I've talked about so far.)
var current_counter_value = client.Increment("user_account::count", 1, 1);

// create the document key of "user_account::1" since we just incremented our master counter document value
var docKey = "user_account::" + current_counter_value;

// UserAccount is your own model class
var user_acct = new UserAccount
{
    Key = docKey,
    FirstName = "Skippy",
    LastName = "JohnJones",
    Email = "skippyjj@email.com",
    PhoneNumber = "555-555-5123"
};

client.Store(StoreMode.Add, docKey, user_acct);

// CouchbaseClient is IDisposable; dispose it when you are done
client.Dispose();
```

Ruby

```ruby
require 'rubygems'
require 'couchbase'

# create the master counter document (add, not set, so an existing counter is never overwritten)
client = Couchbase.new
client.add("user_account::count", 0)

# increment the counter (This isn't actually necessary since we just initialized the document but
# for illustrative purposes and completeness I wanted to show all the steps I've talked about so far.)
current_counter_value = client.incr("user_account::count")

# create the document key of "user_account::1" since we just incremented our master counter document value
doc_key = "user_account::#{current_counter_value}"

# build the user account document as a plain hash
user_acct = {
  :key => doc_key,
  :first_name => "Skippy",
  :last_name => "JohnJones",
  :email => "skippyjj@email.com",
  :phone_number => "555-555-5123"
}

client.add(doc_key, user_acct)
client.disconnect
```
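To make the counter behavior concrete without a running cluster, here is a minimal in-memory sketch in Ruby. InMemoryBucket is a hypothetical stand-in for a Couchbase bucket, not the couchbase gem; it only mimics the two behaviors described above: add stores a value only when the key doesn’t exist yet (so re-running the initialization does not reset the counter), and incr adds one to the stored number and returns the new value.

```ruby
# Hypothetical in-memory stand-in for a Couchbase bucket (NOT the couchbase gem).
# It mimics only the counter behavior described in this post.
class InMemoryBucket
  def initialize
    @data = {}
    @lock = Mutex.new
  end

  # Store the value only if the key is absent; returns false when it already exists.
  def add(key, value)
    @lock.synchronize do
      return false if @data.key?(key)

      @data[key] = value
      true
    end
  end

  # Add one to the stored integer and return the new value.
  def incr(key)
    @lock.synchronize { @data[key] = Integer(@data.fetch(key)) + 1 }
  end
end

# Claim the next id from the master counter and turn it into a document key.
def next_user_account_key(bucket)
  "user_account::#{bucket.incr('user_account::count')}"
end

bucket = InMemoryBucket.new
bucket.add('user_account::count', 5)   # pretend five accounts already exist
bucket.add('user_account::count', 0)   # ignored: the key exists, so the counter is not reset
puts next_user_account_key(bucket)     # prints user_account::6
puts next_user_account_key(bucket)     # prints user_account::7
```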

That’s all there is to getting started with an auto-incrementing key in Couchbase. You might be asking yourself how the heck you look up a document. Well, I’m about to show you.

C#

```csharp
// get the current counter value
var current_counter_value = client.Get("user_account::count");

// form the key to get the latest user account document
var user_acct = client.Get("user_account::" + current_counter_value);
```

Ruby

```ruby
# get the current counter value
current_counter_value = client.get("user_account::count")

# form the key to get the latest user account document
user_acct = client.get("user_account::#{current_counter_value}")
```

Once you have things up and running with this pattern, enumerating through all your documents is pretty easy. Here is an example on how to do that using the user_account info shown above.

C#

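```csharp
// get the current counter value as a number so it can bound the loop
var current_counter_value = Convert.ToUInt64(client.Get("user_account::count"));

// assuming the counter started at 0, keys run from 1 up to and including the counter value
for (ulong i = 1; i <= current_counter_value; i++)
{
    var user_account = client.Get("user_account::" + i);
    // do something with each user_account document
}
```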

Ruby

```ruby
# get the current counter value
current_counter_value = client.get("user_account::count").to_i

# assuming the counter started at 0, keys run from 1 up to and including the counter value
(1..current_counter_value).each do |i|
  user_account = client.get("user_account::#{i}")
  # do something with the user account document
end
```
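One detail worth spelling out: if the counter starts at 0, the first increment returns 1, so the keys run from user_account::1 up to and including user_account::<counter>, and there is never a user_account::0. Here is a sketch of that enumeration in Ruby with a plain Hash standing in for the bucket (an assumption for illustration; with the couchbase gem you would call client.get instead). It also skips missing keys, which can occur if a process claimed an id from the counter but never stored the document.

```ruby
# Yield each user account document whose key was generated from the master counter.
# `store` is anything that responds to [] (a plain Hash here, standing in for a bucket).
def each_user_account(store)
  return enum_for(:each_user_account, store) unless block_given?

  count = store['user_account::count'].to_i
  (1..count).each do |i|               # assumes the counter started at 0, so ids start at 1
    doc = store["user_account::#{i}"]
    next if doc.nil?                   # skip ids that were claimed but never stored
    yield doc
  end
end

store = {
  'user_account::count' => 3,
  'user_account::1' => { 'first_name' => 'Skippy' },
  'user_account::3' => { 'first_name' => 'Jane' }   # id 2 was claimed but never stored
}
each_user_account(store) { |doc| puts doc['first_name'] }   # prints Skippy, then Jane
```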

So that’s pretty much it. Overall, using an atomic counter with the lookup pattern in a NoSQL solution is a great way to create keys that are unique, easy to reconstruct and let you retrieve documents in a very fast, efficient manner. Also keep in mind that counter operations are atomic: each increment is applied on the server as a single step, so two clients incrementing the counter at the same time will never get the same value. So now you have a simple way to generate unique auto-incrementing keys, just like you do in SQL databases.
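The atomicity point is what makes this pattern safe when several processes create accounts at the same time. Here is a small Ruby sketch of that property: eight threads claim ids from a shared counter, and because the read-add-write happens as one indivisible step (a Mutex here, standing in for the server-side atomic increment in Couchbase), no two threads receive the same id.

```ruby
# Shared counter; the Mutex plays the role of Couchbase's server-side atomic increment.
class AtomicCounter
  def initialize(start = 0)
    @value = start
    @lock = Mutex.new
  end

  # Read, add one and write back as one indivisible step; return the new value.
  def incr
    @lock.synchronize { @value += 1 }
  end
end

counter = AtomicCounter.new

# Eight "processes" each create 100 accounts at the same time.
threads = Array.new(8) do
  Thread.new { Array.new(100) { "user_account::#{counter.incr}" } }
end
all_keys = threads.flat_map(&:value)   # Thread#value waits for the thread and returns its result

puts all_keys.size        # prints 800
puts all_keys.uniq.size   # prints 800: no id was handed out twice
```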

Optimistic Locking with Couchbase

Today I’d like to spend some time covering how to handle optimistic locking with the .NET Couchbase client. Couchbase’s documentation covers optimistic locking pretty well in its discussion of the CAS (check and set) method options. I searched Google for a good example of an actual implementation with the .NET client but couldn’t find anything, so naturally I came up with my own, and hopefully this blog post will help someone else out in the future.

I’ll focus on the update operation here, as that is mainly what I care about when it comes to optimistic locking. The important thing to remember is that your Couchbase model needs a property that can hold the CAS value Couchbase generates when you first store a document or update it. In my case I have a base model class with three properties: Key, CasValue and Type. Each model inherits from this base class, so these properties are available on every model. When I create or get a document, I set the CasValue and Key values so that my logic can use them later on. The Key property is the document key used to retrieve the document from Couchbase in the future. The Type property is abstract and is overridden by each model with the appropriate model type; it makes it easy to narrow our focus when creating views in Couchbase. The CasValue property holds the CAS value generated by Couchbase and is the key to optimistic locking. When I want to update an existing document, the CasValue I hold must match the CAS value currently stored in Couchbase for that document. If it is different, another process has updated the document since I read it, so I need to get the latest document, apply my changes to it and save it again; otherwise the other process’s updates would be overwritten. To accomplish this, the method takes the changes as a delegate, which the caller supplies as a lambda expression. Below is the code for handling the update and retrying when the document has been altered since we last retrieved it from Couchbase. I’ve left out some details, like how the GetDocument and CreateDocument methods work, so that you can try adding that logic yourself.

```csharp
/// <summary>
/// Loads a clean copy of the model and passes it to the given
/// block, which should apply changes to that model that can
/// be retried from scratch multiple times until successful.
///
/// This method will return the final state of the saved model.
/// The caller should use this afterward, instead of the instance
/// it had passed in to the call.
/// </summary>
/// <param name="model">The model.</param>
/// <param name="block">The block.</param>
/// <returns>the latest version of the model provided to the method</returns>
public virtual T UpdateDocumentWithRetry(T model, Action<T> block)
{
    var documentKey = model.Key;
    var success = false;
    T documentToReturn = null; // the enclosing generic class constrains T to a reference type

    while (!success)
    {
        // load a clean copy of the document
        var latestDocument = GetDocument(documentKey);

        // if we were unable to find a document then create the document and
        // return the latest version of the document to the caller
        if (latestDocument == null)
        {
            // document doesn't exist so Add it here and then exit the
            // method returning the document that was saved to Couchbase
            return CreateDocument(model);
        }

        // pass the latest document to the given block so the latest changes can be applied
        if (block != null)
            block(latestDocument);

        // only replace the document if its CAS value still matches the one we loaded
        var latestDocumentJson = latestDocument.ToJson();
        var storeResult = Client.ExecuteCas(StoreMode.Replace, latestDocument.Key, latestDocumentJson,
            latestDocument.CasValue);

        // another process changed the document since we loaded it, so start over
        if (!storeResult.Success)
            continue;

        // keep the new CAS value so the returned model can be updated again later
        latestDocument.CasValue = storeResult.Cas;
        documentToReturn = latestDocument;
        success = true;
    }

    return documentToReturn;
}
```

Here is a test showing how to use the UpdateDocumentWithRetry method above. Keep in mind that it uses fictitious data to illustrate the idea, so you will need to adapt it to your own data model.

```csharp
[Test]
public void TestUpdateAndRetry()
{
    var documentKey = "document::1";
    var originalDocument = new Document
    {
        Key = documentKey,
        update_count = 1,
        last_update_time = DateTime.UtcNow
    };

    // Store returns a bool, so its result is not assigned back to the document
    Client.Store(StoreMode.Add, documentKey, originalDocument.ToJson());

    // GetDocument is the same helper UpdateDocumentWithRetry uses; it sets Key and CasValue
    var doc1 = GetDocument(documentKey);
    var doc2 = GetDocument(documentKey);

    doc1.update_count = originalDocument.update_count + 1;
    Client.Store(StoreMode.Set, documentKey, doc1.ToJson());

    var count = 0;
    doc2 = UpdateDocumentWithRetry(doc2, doc =>
    {
        count += 1;
        if (count == 1)
        {
            // simulate another process updating the document during our first attempt
            doc1.update_count = doc1.update_count + 1;
            Client.Store(StoreMode.Set, documentKey, doc1.ToJson());
        }
        doc.update_count = doc.update_count + 1;
    });

    var latestDocument = GetDocument(documentKey);

    // NUnit's Assert.AreEqual takes the expected value first
    Assert.AreEqual(2, count);
    Assert.AreEqual(3, doc1.update_count);
    Assert.AreEqual(4, doc2.update_count);
    Assert.AreEqual(4, latestDocument.update_count);
}
```
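For comparison, here is the same retry idea, including the interference the test simulates, as a self-contained Ruby sketch. CasStore is a hypothetical in-memory stand-in for a bucket, not the couchbase gem: each successful write issues a new CAS value, and replace only succeeds when the caller’s CAS value still matches. update_with_retry mirrors UpdateDocumentWithRetry (reload, reapply the change, attempt the CAS write, repeat on a mismatch), except that it raises instead of creating a missing document.

```ruby
# Hypothetical in-memory store with CAS semantics (NOT the couchbase gem).
class CasStore
  def initialize
    @data = {}        # key => [value, cas]
    @last_cas = 0
    @lock = Mutex.new
  end

  # Unconditional write (like a plain Set); returns the new CAS value.
  def set(key, value)
    @lock.synchronize { write(key, value) }
  end

  # Returns [copy_of_value, cas], or nil when the key does not exist.
  def get(key)
    @lock.synchronize do
      entry = @data[key]
      entry && [copy(entry[0]), entry[1]]
    end
  end

  # Write only if the stored CAS still equals `cas`; returns the new CAS or nil.
  def replace(key, value, cas)
    @lock.synchronize do
      entry = @data[key]
      return nil if entry.nil? || entry[1] != cas

      write(key, value)
    end
  end

  private

  # Store a private copy and issue a fresh CAS value (caller must hold the lock).
  def write(key, value)
    @last_cas += 1
    @data[key] = [copy(value), @last_cas]
    @last_cas
  end

  def copy(value)
    Marshal.load(Marshal.dump(value))
  end
end

# Reload the document, let the block apply its change, and retry until the CAS write wins.
def update_with_retry(store, key)
  loop do
    doc, cas = store.get(key)
    raise KeyError, "no document for #{key}" if doc.nil?

    yield doc
    return doc if store.replace(key, doc, cas)
  end
end

store = CasStore.new
store.set('document::1', { 'update_count' => 2 })

attempts = 0
result = update_with_retry(store, 'document::1') do |doc|
  attempts += 1
  # On the first attempt, simulate another process writing between our get and replace.
  store.set('document::1', { 'update_count' => 3 }) if attempts == 1
  doc['update_count'] += 1
end

puts attempts                 # prints 2
puts result['update_count']   # prints 4
```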

Hopefully this will help you get started with implementing optimistic locking in your application with whatever NoSQL solution you are using. You can also read more on CAS operations in the Couchbase .NET 1.2 client by going here: Couchbase CAS Method Documentation

Couchbase Keys

Following up on my previous post, Dealing with Large Data Sets in Couchbase, I wanted to talk a little bit about document keys in Couchbase. In that post I mentioned that we didn’t create keys for our documents and instead let Couchbase create a key for us. While this makes it super quick and easy to get started with Couchbase, it has drawbacks. As I pointed out in that post, you can run into limits with views, which makes it harder to get to the documents you want. Another issue is figuring out how to get the specific document you want to work with. A view can retrieve documents that have random keys, but think about how you would retrieve one specific document. You would need to put very specific criteria in the view to get that document, or add enough criteria to narrow the result set so that further logic, in whatever programming language you are using, can filter it down to the document you want. Using specific criteria in a view to narrow the results to one document is a very bad idea. For one, it’s not a reusable way to get other documents: you will either need to update the view each time you want a different document or create a new view for it.

Time for some examples. Suppose you have the following documents:

```json
{
  "name": "Philadelphia Flyers",
  "sport": "Hockey",
  "city": "Philadelphia",
  "state": "PA"
}

{
  "name": "Philadelphia Union",
  "sport": "Soccer",
  "city": "Philadelphia",
  "state": "PA"
}

{
  "name": "Washington Capitals",
  "sport": "Hockey",
  "city": "Washington",
  "state": "DC"
}

{
  "name": "DC United",
  "sport": "Soccer",
  "city": "Washington",
  "state": "DC"
}
```

Since the documents above don’t have keys I know in advance, I can’t simply go and get the document named “Philadelphia Union”. To get that document I need a view. Here is an example of the view required to find the document named “Philadelphia Union”.

```javascript
function (doc, meta) {
  if (doc.sport == "Soccer" && doc.city == "Philadelphia") {
    emit(meta.id, null);
  }
}
```

Below is an example of the code, in Ruby, that will be needed in order to query the view to get the document key and then use that key to call Couchbase to get the actual document we want to work with.

```ruby
#!/usr/bin/env ruby
require 'rubygems'
require 'couchbase'
require 'json'

# Couchbase Server IP
ip = 'localhost'

# Bucket name
bucket_name = 'Your_Bucket_Name'

# Design Doc Name
design_doc_name = 'Your_Doc_Name'

client = Couchbase.connect("http://#{ip}:8091/pools/default/buckets/#{bucket_name}")

# Get all the existing items from your view
design_doc = client.design_docs[design_doc_name]

# Replace "by_id" with the name of your view
view = design_doc.by_id

# loop through all items in the view
view.each do |doc|
  # get the view result document key
  doc_key = doc.key

  # query Couchbase to retrieve the document using the key retrieved from the view
  document = JSON.parse(client.get(doc_key, :quiet => false))

  # Do whatever you need to do with your document
  puts document.inspect
end

client.disconnect
```
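To see what those two round trips amount to, here is a small Ruby sketch that emulates them in memory: run the map function over every document, collect the emitted ids, then fetch the full document by id. The ids auto-1 through auto-4 are hypothetical placeholders for keys Couchbase would have generated, and the Ruby lambdas are only stand-ins for JavaScript map functions like the one above.

```ruby
# Documents keyed by hypothetical auto-generated ids.
DOCS = {
  'auto-1' => { 'name' => 'Philadelphia Flyers', 'sport' => 'Hockey', 'city' => 'Philadelphia' },
  'auto-2' => { 'name' => 'Philadelphia Union',  'sport' => 'Soccer', 'city' => 'Philadelphia' },
  'auto-3' => { 'name' => 'Washington Capitals', 'sport' => 'Hockey', 'city' => 'Washington' },
  'auto-4' => { 'name' => 'DC United',           'sport' => 'Soccer', 'city' => 'Washington' }
}.freeze

# Ruby stand-in for the map function above: emit the id of each matching document.
PHILADELPHIA_SOCCER = lambda do |doc, id, emitted|
  emitted << id if doc['sport'] == 'Soccer' && doc['city'] == 'Philadelphia'
end

# "Build the index" by running a map function over every document.
def run_view(docs, map_fn)
  emitted = []
  docs.each { |id, doc| map_fn.call(doc, id, emitted) }
  emitted
end

ids = run_view(DOCS, PHILADELPHIA_SOCCER)
p ids                          # prints ["auto-2"]
p DOCS[ids.first]['name']      # second round trip by id; prints "Philadelphia Union"

# A different question needs a different map function, i.e. another view.
WASHINGTON_SOCCER = lambda do |doc, id, emitted|
  emitted << id if doc['sport'] == 'Soccer' && doc['city'] == 'Washington'
end
p run_view(DOCS, WASHINGTON_SOCCER)   # prints ["auto-4"]
```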

While the view is simple and quick to create, it only returns the document key that Couchbase generated, which means I have to make another request to Couchbase to actually get the document. You might be wondering why I didn’t just return the document from the view; that is not a best practice. For one, emitting the whole document copies it into the view index, which is inefficient and takes up more disk space than necessary. Plus, making multiple requests to Couchbase, or to any NoSQL solution for that matter, is very fast. Most developers coming from a SQL background have a hard time getting used to making multiple queries against the datastore, and for good reason when dealing with SQL, but Couchbase and NoSQL solutions in general are built for speed and efficiency in retrieving data, so making multiple requests isn’t a problem.

Now back to the document key issue. Suppose I want to get the soccer team from the city of Washington. I can’t do that using the view that was previously created and I either have to change the view or create a new one to get the new document I want. That isn’t very efficient.

The best way to solve this problem is to take some time to come up with unique document keys that you can remember, or reconstruct from other data in your application, to retrieve documents. Even if you think you will never need to retrieve a specific document from your dataset, you should give your documents known keys, because I can guarantee that at some point you will need a specific document, and knowing your key structure will help tremendously. Let’s look at the same examples again, but this time with a key I create specifically so that I can retrieve each document very easily.

```json
{
  "id": "Hockey::PhiladelphiaFlyers",
  "name": "Philadelphia Flyers",
  "sport": "Hockey",
  "city": "Philadelphia",
  "state": "PA"
}

{
  "id": "Soccer::PhiladelphiaUnion",
  "name": "Philadelphia Union",
  "sport": "Soccer",
  "city": "Philadelphia",
  "state": "PA"
}

{
  "id": "Hockey::WashingtonCapitals",
  "name": "Washington Capitals",
  "sport": "Hockey",
  "city": "Washington",
  "state": "DC"
}

{
  "id": "Soccer::DCUnited",
  "name": "DC United",
  "sport": "Soccer",
  "city": "Washington",
  "state": "DC"
}
```

I’ve put together a quick Ruby example that retrieves the document for the soccer team in Philadelphia.

```ruby
#!/usr/bin/env ruby
require 'rubygems'
require 'couchbase'

ip = 'localhost'
bucket_name = 'default'

client = Couchbase.connect("http://#{ip}:8091/pools/default/buckets/#{bucket_name}")

doc = client.get("Soccer::PhiladelphiaUnion", :quiet => false)
puts doc.inspect

client.disconnect
```

As you can see the code to retrieve a document directly is much shorter and easier to write. Now, if I want to get the hockey team from Philadelphia all I have to do is replace “Soccer::PhiladelphiaUnion” in the client.get method with “Hockey::PhiladelphiaFlyers” and I’ll have my new document.
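Because every key follows the same Sport::TeamName pattern, the application can rebuild a key from data it already has instead of querying a view. A minimal Ruby sketch with a plain Hash standing in for the bucket; team_key is a hypothetical helper, not part of the couchbase gem.

```ruby
# Build a document key of the form "Sport::TeamName" (spaces removed).
def team_key(sport, name)
  "#{sport}::#{name.delete(' ')}"
end

teams = [
  { 'name' => 'Philadelphia Flyers', 'sport' => 'Hockey' },
  { 'name' => 'Philadelphia Union',  'sport' => 'Soccer' },
  { 'name' => 'Washington Capitals', 'sport' => 'Hockey' },
  { 'name' => 'DC United',           'sport' => 'Soccer' }
]

# Plain Hash standing in for the bucket, keyed by the constructed keys.
bucket = teams.each_with_object({}) { |t, h| h[team_key(t['sport'], t['name'])] = t }

# Direct lookups: no view needed, just rebuild the key.
puts bucket[team_key('Soccer', 'Philadelphia Union')]['name']    # prints Philadelphia Union
puts bucket[team_key('Hockey', 'Philadelphia Flyers')]['name']   # prints Philadelphia Flyers
```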

Hopefully the examples above have helped you realize why it’s important to create a key for your documents. For more in depth help on creating documents for Couchbase I would recommend checking out this site, Couchbase Models, by Jasdeep Jaitla who works at Couchbase.

Dealing with large data sets in Couchbase Views

The other day I ran into an issue while trying to extract data from Couchbase 2.0 into CSV files. The problem stemmed from our original document design: we never created a known, unique key schema for our documents and instead had Couchbase assign a unique key to each document. In a future post I will expand on key schema designs for Couchbase and why I believe you should create a known, unique key for all of your documents. Back to the issue at hand: I had no way to extract the documents I wanted other than by creating a view. That isn’t a big deal in itself, but because of the size of our data it cost me a couple of hours of troubleshooting when I went to extract the documents through the view. I wrote a Ruby script using the latest Couchbase gem as of this writing (1.2.2) but ran into an out-of-memory issue. Below is a screenshot of the issue I was getting:

Here is a sample of the code I was using that caused the problem. Bonus points if you can spot the error.

```ruby
#!/usr/bin/env ruby
require 'rubygems'
require 'couchbase'
require 'json'
require 'csv'

# Couchbase Server IP
ip = 'localhost'

# Bucket name
bucket_name = 'Your_Bucket_Name'

# Design Doc Name
design_doc_name = 'Your_Doc_Name'

# Output Filename
output_filename = 'extracted_data_file.csv'

client = Couchbase.connect("http://#{ip}:8091/pools/default/buckets/#{bucket_name}")
document_count = 0

# Get all the existing items
design_doc = client.design_docs[design_doc_name]

# Replace "by_id" with the name of your view
view = design_doc.by_id

view.each do |doc|
  doc_key = doc.key

  # if the document can't be retrieved or it fails to
  # be converted to JSON just continue to the next document
  begin
    document = JSON.parse(client.get(doc_key))
  rescue
    next
  end

  # open file for appending. if the file doesn't exist it will be created.
  CSV.open(output_filename, 'ab', {:col_sep => '|'}) do |csv|
    csv << document.keys if document_count == 0 # write the header row first
    csv << document.values # write document values to the csv file
  end

  document_count += 1
end

client.disconnect

puts "Total Documents Extracted = #{document_count}"
```

Hopefully you spotted the issue. It took me a little while to find the problem, but once I did, everything worked great. The main issue is that the view was returning over 90MM document keys, which caused the memory error. The Ruby client is supposed to stream the results, but it doesn’t appear to do that well; otherwise the memory error shouldn’t have been reached. To resolve the issue, replace the line view = design_doc.by_id in the script above with the following:

```ruby
view = design_doc.by_id(:limit => 10000000, :stale => false)
```

One thing to note: in my case I wanted the results to include the most recently inserted documents, so I added :stale => false, but that option isn’t required. If you can live with slightly stale results, just remove it. For those of you who don’t know what :stale => false does, it updates the view’s index before the query is executed, so any newly inserted documents are included in the results. The other :stale options are :ok and :update_after. You can read more about these options in the Couchbase 2.0 documentation.

The main thing to focus on is the :limit option. You can experiment with the maximum limit you use. In my case 10MM seemed to be the magic value that avoided the out-of-memory error I was experiencing; with any :limit value higher than 10MM I got the error again. Your results could be different, so you will have to determine the right limit for your data.
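If a view holds more rows than the limit you settle on, one way to still cover all of them is to page by key: ask for the next page starting after the last key you saw. Below is a sketch of that loop over an in-memory, key-sorted array standing in for the view index; fetch_page and each_key_in_pages are hypothetical helpers, and against a real view each page would be a separate query using the view’s startkey and limit parameters (dropping the repeated first row, or passing skip=1). Because this view emits document ids, every key is unique, which keeps the "start after the last key" rule simple.

```ruby
# Key-sorted array standing in for a view index whose keys are unique document ids.
VIEW_ROWS = (1..25).map { |i| format('doc::%03d', i) }.freeze

# Hypothetical helper: return up to `limit` keys strictly after `after_key`.
def fetch_page(rows, after_key, limit)
  start = after_key.nil? ? 0 : rows.bsearch_index { |k| k > after_key } || rows.size
  rows[start, limit] || []
end

# Walk the whole view page by page, yielding each key exactly once.
def each_key_in_pages(rows, page_size)
  last_key = nil
  loop do
    page = fetch_page(rows, last_key, page_size)
    break if page.empty?

    page.each { |key| yield key }
    last_key = page.last
  end
end

seen = []
each_key_in_pages(VIEW_ROWS, 10) { |key| seen << key }
puts seen.size   # prints 25
```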

Hope this helps someone in the future; it took me a few hours to figure out how to solve this problem, and Googling didn’t provide much help.